Are Text-to-Image AI-Systems the Solution for All Visualization Challenges?

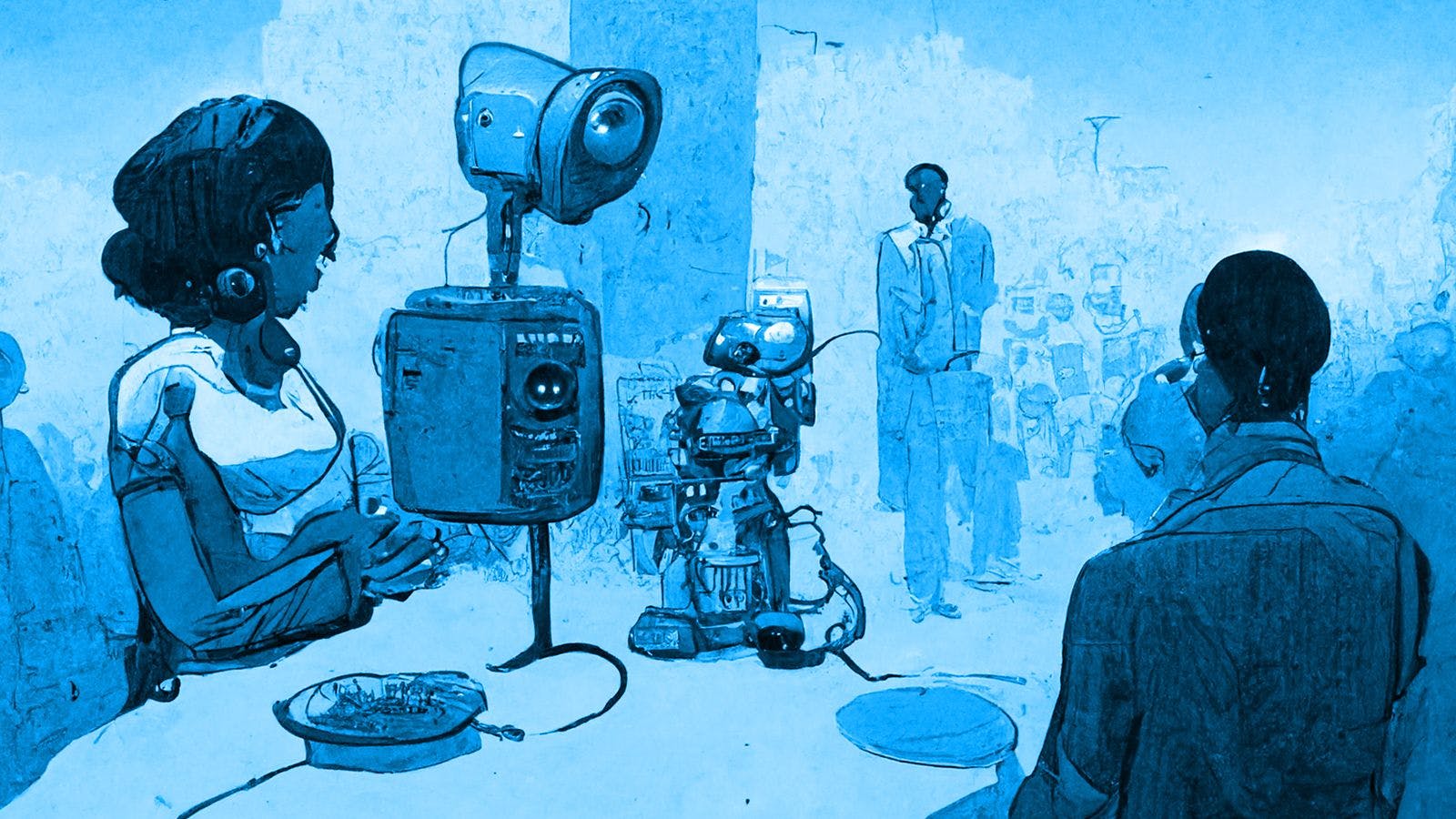

,For the new website of DW Innovation we needed images to visualize the topics we work on. These terms are rather abstract, and we have always had trouble finding the right images. So, we decided to give it a go with the state-of-the-art AI technology called text-to-image.

What is this, text-to-image?

Imagine you could create any visual you would like, without limitations, without knowing how to paint, draw, design or photograph. Impossible? Not with the most recent developments in artificial intelligence. The so-called text-to-image applications are machine learning models which use a natural language description and produce an image based on that description. They are based on deep neural networks: highly advanced computer systems that can understand human language (mostly in written form) and transform those words into a visual representation.

Starting around 2010 these systems have progressed so much, that in 2022, models such as OpenAI's DALL-E 2, Google Brain's Imagen and StabilityAI's Stable Diffusion are getting very close to producing outcome in the quality of real photographs and human-drawn art.

How does it work?

In general, these text-to-image models work in two steps. They use a language model to transform the input text into a data-based representation and then a generative image model turns that representation into an image. Most engines start with a seed, a randomly generated color mix and then turn this step by step into the final image. The way they know what to create is based on the data they have been trained on. Usually, we talk about massive amounts of images and text data scraped from the web.

On a practical level you start by describing the image you have in mind to the system. This can be as short as “New York on a spring day” or much longer, describing layers of layers as well as quality (e.g. “high-level details” or “blurry background”) or format aspects (e.g. “graphic novel style” or “35mm photography”) of your idea. This description is called ‘the prompt’.

What’s very impressive is that these systems can even copy the styles of former and existing artists and apply them to old images or to the ones you are just trying to create (e.g. “in the style of Picasso”).

Does it solve all your problems?

One would think if only my mind is the limit to these images, it should be the one-for-all solution. But as with all AI-systems, we’re not there yet. Yes, the systems can interpret all input and create respective outputs, but they are in the learning phase. A computer system still doesn’t know exactly what “happy” means – it can only relate to it through previously seen images of e.g., smiling people that were described or tagged as happy. Therefore, the outcome of a prompt like “happy people” is always just a mixture of a thousand images of smiling people that the AI puts together in a new way.

Remember the abstract topics that we wanted visualized for our blog? We ran into the same problem as always. We got results that were similar to the ones google (or any other search engine) would have given us for "innovation”: lamps, people pointing at whiteboards, a group of hip youngsters with lots of MacBooks on an old wooden table. So, we had to make our prompts more sophisticated, give the AI more details about what we think innovation or Human Language Technology actually is. Luckily, with some creativity we found our way. The benefit being that we only needed to apply some color filters to create a series of fitting images.

What do you need to learn and try it yourself?

You should start by choosing your platform, the most popular ones currently being OpenAI’s Dall-e, MidJourney, StableDiffusion’s Dreamstudio and Craiyon In principle they all do the same and are at similar quality levels. But since the algorithms behind them work differently and since they have probably been trained on different materials, the outcomes are not all the same. Find the one you like best and go with that one.

The quickest way to make this decision is also the best way to learn: just watch what other people are doing on the platforms. All of them make their results publicly available, so you can scroll through the artwork of other users and decide what you like and what not.

This also helps you to find new prompts and to better understand how to best feed the system. Not all descriptions work equally well.

The tip of all tips to get started

Expect everything and nothing, be creative in your way of describing what you want and bring some patience along. Your first results are most likely going to be impressive, but not exactly what you had in mind.

And do not forget to check out the topics that we work on at DW Innovation.